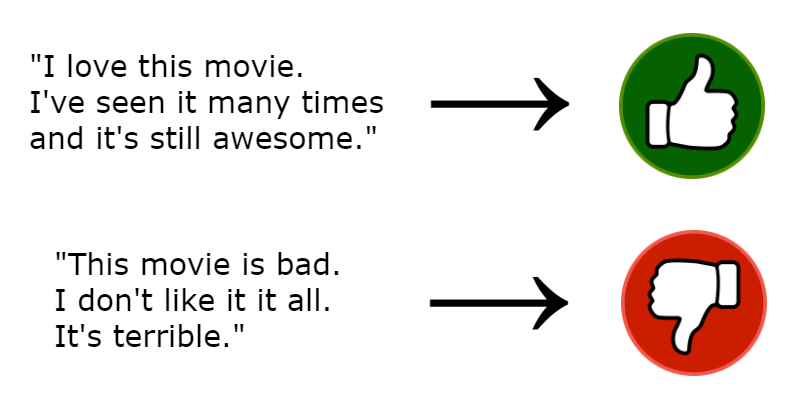

Sentiment Analysis

Given the text of a movie review, we are going to classify it as a positive or negative review.

We’ll use the IMDB dataset that contains the text of 50,000 movie reviews from the Internet Movie Database. These are split into 25,000 reviews for training and 25,000 reviews for testing. The training and testing sets are balanced, meaning they contain an equal number of positive and negative reviews.

IMDB Dataset

Download the Dataset

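```python
import tensorflow as tf

imdb = tf.keras.datasets.imdb

# Keep only the 10,000 most frequent words; rarer words are replaced by an out-of-vocabulary index
(train_data, train_labels), (test_data, test_labels) = imdb.load_data(num_words=10000)
```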

Explore the Dataset

```python
train_data.shape, train_labels.shape, test_data.shape, test_labels.shape
```

((25000,), (25000,), (25000,), (25000,))

```python
len(train_data[0])
```

218

Each of these integers corresponds to a word. The word-to-integer dictionary can be downloaded with `get_word_index`:

```python
word_2_int = tf.keras.datasets.imdb.get_word_index(path='imdb_word_index.json')

word_2_int['hello'], word_2_int['world']
```

(4822, 179)

```python
len(word_2_int)
```

88584
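To sanity-check the encoding, it helps to turn a review back into words. Below is a minimal sketch. It assumes the defaults of `imdb.load_data` (`start_char=1`, `oov_char=2`, `index_from=3`), which add 3 to every index from `get_word_index` and use the low IDs as special markers. The helper `decode_review` and the small `toy_word_index` dictionary are hypothetical. In the notebook you would pass `word_2_int` and a row of the raw `train_data` instead.

```python
# Reserved IDs used by imdb.load_data with its default arguments
PAD, START, OOV = 0, 1, 2
INDEX_FROM = 3  # every index from get_word_index() is shifted by this amount

def decode_review(encoded, word_index, index_from=INDEX_FROM):
    """Turn a list of integer IDs back into text."""
    # Invert the word -> index mapping, applying the same shift as load_data
    int_to_word = {idx + index_from: word for word, idx in word_index.items()}
    int_to_word[PAD] = "<PAD>"
    int_to_word[START] = "<START>"
    int_to_word[OOV] = "<UNK>"
    # Any ID without an entry (e.g. the unused ID 3) is shown as unknown
    return " ".join(int_to_word.get(i, "<UNK>") for i in encoded)

# Hypothetical miniature word index (the real one has 88,584 entries)
toy_word_index = {"the": 1, "movie": 17, "worst": 246}

print(decode_review([1, 4, 249, 20, 2], toy_word_index))  # <START> the worst movie <UNK>
```

With the real data, `decode_review(train_data[0], word_2_int)` should print the first training review, beginning with `<START>`. If you run it after padding, trailing zeros show up as `<PAD>`.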

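```python
def sentence_2_int(sentence):
    # Look up each lower-cased, space-separated word in the word index
    sentence_2_int_list = []
    for i in sentence.lower().split(' '):
        sentence_2_int_list.append(word_2_int[i])
    return sentence_2_int_list

sentence = "Worst movie i've ever seen"
sentence_2_int(sentence)
```

[246, 17, 204, 123, 107]

This helper looks words up directly in `word_2_int`, so it raises a `KeyError` for any word missing from the index. It also skips the shift that `load_data` applies: with its default `index_from=3`, every word index is increased by 3, leaving 0 free for padding and using 1 and 2 as the start and out-of-vocabulary markers. As a result, these IDs do not exactly match the encoding of the training reviews.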
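Here is a sketch of an encoder that follows the conventions of `load_data` more closely. It assumes the loader's defaults (`start_char=1`, `oov_char=2`, `index_from=3`) and the `num_words=10000` used above. It prepends the start marker and adds 3 to each index. Words missing from the index, or falling outside the 10,000-ID vocabulary, become the out-of-vocabulary ID instead of raising `KeyError`. The regex tokenisation is only a rough approximation of how the dataset was built. The name `encode_review` is hypothetical, and so is the `"rare"` entry in the toy dictionary; the other entries are copied from the output above.

```python
import re

START, OOV = 1, 2
INDEX_FROM = 3

def encode_review(text, word_index, num_words=10000, index_from=INDEX_FROM):
    """Approximate imdb.load_data's encoding for a new piece of text."""
    # Rough tokenisation: lower-case, keep letters, digits and apostrophes
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    ids = [START]
    for token in tokens:
        idx = word_index.get(token)
        # load_data keeps an ID only if it is below num_words (after the shift)
        if idx is None or idx + index_from >= num_words:
            ids.append(OOV)
        else:
            ids.append(idx + index_from)
    return ids

toy_word_index = {"worst": 246, "movie": 17, "i've": 204, "ever": 123, "seen": 107, "rare": 20000}
print(encode_review("Worst movie i've ever seen!", toy_word_index))  # [1, 249, 20, 207, 126, 110]
```

To feed the result to the model, pad it like the training data, for example `pad_sequences([encode_review(text, word_2_int)], padding='post', maxlen=sentence_len)`. The network was trained on shifted IDs, so this encoding should line up better with what it learned than the raw lookup.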

Preparing the data

Padding

Reviews have different lengths, but to pass them through the ANN model we need fixed-length input. So we:

- pad short reviews (here with zeros)
- truncate long reviews to a fixed length

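```python
pad_value = 0       # ID used to fill short reviews
sentence_len = 100  # every review becomes exactly this many word IDs

# padding='post' appends the pad value to short reviews; truncating keeps its
# default 'pre', so long reviews keep their last sentence_len words
train_data = tf.keras.preprocessing.sequence.pad_sequences(train_data,
                                                           value=pad_value,
                                                           padding='post',
                                                           maxlen=sentence_len)
test_data = tf.keras.preprocessing.sequence.pad_sequences(test_data,
                                                          value=pad_value,
                                                          padding='post',
                                                          maxlen=sentence_len)

train_data.shape, train_labels.shape, test_data.shape, test_labels.shape
```

((25000, 100), (25000,), (25000, 100), (25000,))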
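To make the padding and truncation rules concrete, here is a pure-NumPy sketch that mimics `pad_sequences(..., padding='post', maxlen=...)` with its default `truncating='pre'`. Short sequences get the pad value appended, and long ones keep only their last `maxlen` values. It is an illustration only, not a replacement for the Keras function, and `pad_post` is a hypothetical name.

```python
import numpy as np

def pad_post(sequences, maxlen, value=0):
    """Pad at the end and truncate at the start, like padding='post', truncating='pre'."""
    out = np.full((len(sequences), maxlen), value, dtype=np.int32)
    for row, seq in enumerate(sequences):
        kept = list(seq)[-maxlen:]    # 'pre' truncation keeps the last maxlen items
        out[row, :len(kept)] = kept   # 'post' padding leaves the fill value after the data
    return out

print(pad_post([[5, 6], [1, 2, 3, 4, 5, 6]], maxlen=4))
```

The first row becomes `[5, 6, 0, 0]`. The second row is cut to its last four values, `[3, 4, 5, 6]`. Passing `truncating='post'` to `pad_sequences` would instead keep the first words of long reviews.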

ANN Model

Our model uses a new kind of layer: the Embedding layer.

Each word of a review is now represented by an integer, but we cannot feed these integers to the model directly. The IDs are arbitrary labels (word 246 is not "bigger" than word 17 in any meaningful way), so each integer has to be converted into a vector that describes the word.

But how do we decide what these vectors should be? We leave that to the model, which learns them during training.

So the Embedding layer takes in an integer and converts it into a vector of length `embedding_dim`.
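Under the hood, an embedding is a lookup table. It is a trainable matrix with one row per word ID, and looking up an ID returns that row. The NumPy sketch below uses the sizes from this notebook (`vocab_size=10000`, `embedding_dim=16`, reviews of 100 IDs). The random matrix stands in for the weights the model would learn.

```python
import numpy as np

vocab_size, embedding_dim, sentence_len = 10000, 16, 100

rng = np.random.default_rng(0)
# One row per word ID; in Keras these values are learned during training
embedding_matrix = rng.normal(size=(vocab_size, embedding_dim)).astype(np.float32)

# A batch of 2 padded reviews, each a row of 100 integer word IDs
batch = rng.integers(0, vocab_size, size=(2, sentence_len))

# Integer indexing picks out one 16-dimensional row per word ID
vectors = embedding_matrix[batch]
print(vectors.shape)  # (2, 100, 16), like the Embedding output shape (None, 100, 16)
```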

```python
import tensorflow as tf

vocab_size = 10000    # same as num_words passed to load_data
embedding_dim = 16    # length of the vector learned for each word

tf.keras.backend.clear_session()

model = tf.keras.Sequential([
    # The model takes as input an integer matrix of size (batch, sentence_len).
    tf.keras.Input(shape=(sentence_len,), dtype='int32'),
    tf.keras.layers.Embedding(vocab_size, embedding_dim),
    # Now model.output_shape == (None, 100, 16), where None is the batch dimension.
    tf.keras.layers.Dropout(0.4),
    tf.keras.layers.Flatten(),                                # (None, 1600)
    tf.keras.layers.Dense(units=sentence_len*embedding_dim),
    tf.keras.layers.Activation('relu'),
    tf.keras.layers.Dropout(0.4),
    tf.keras.layers.Dense(units=500),
    tf.keras.layers.Activation('relu'),
    tf.keras.layers.Dropout(0.4),
    tf.keras.layers.Dense(units=1),
    tf.keras.layers.Activation('sigmoid')                     # probability of a positive review
])
```

```python
model.summary()
```

```
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding (Embedding)        (None, 100, 16)           160000
_________________________________________________________________
dropout (Dropout)            (None, 100, 16)           0
_________________________________________________________________
flatten (Flatten)            (None, 1600)              0
_________________________________________________________________
dense (Dense)                (None, 1600)              2561600
_________________________________________________________________
activation (Activation)      (None, 1600)              0
_________________________________________________________________
dropout_1 (Dropout)          (None, 1600)              0
_________________________________________________________________
dense_1 (Dense)              (None, 500)               800500
_________________________________________________________________
activation_1 (Activation)    (None, 500)               0
_________________________________________________________________
dropout_2 (Dropout)          (None, 500)               0
_________________________________________________________________
dense_2 (Dense)              (None, 1)                 501
_________________________________________________________________
activation_2 (Activation)    (None, 1)                 0
=================================================================
Total params: 3,522,601
Trainable params: 3,522,601
Non-trainable params: 0
_________________________________________________________________
```
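The parameter counts in the summary follow directly from the layer sizes. An embedding has `vocab_size * embedding_dim` weights. A dense layer has `inputs * units` weights plus one bias per unit. Dropout, Flatten and Activation layers have no parameters. A quick check:

```python
vocab_size, embedding_dim, sentence_len = 10000, 16, 100

def dense_params(n_in, n_out):
    # weight matrix plus one bias per output unit
    return n_in * n_out + n_out

flat = sentence_len * embedding_dim  # 1600 values after Flatten
params = {
    "embedding": vocab_size * embedding_dim,  # 160000
    "dense": dense_params(flat, flat),        # 2561600
    "dense_1": dense_params(flat, 500),       # 800500
    "dense_2": dense_params(500, 1),          # 501
}
print(sum(params.values()))  # 3522601
```

Roughly 73% of all weights sit in the first dense layer, so shrinking it is a natural first step when experimenting with the architecture.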

```python
optimizer = tf.keras.optimizers.Adam(learning_rate=0.001)
model.compile(optimizer=optimizer, loss='binary_crossentropy', metrics=['accuracy'])

# The test set doubles as validation data here
tf_history = model.fit(train_data, train_labels, batch_size=2000, epochs=10,
                       verbose=True, validation_data=(test_data, test_labels))
```

```
Train on 25000 samples, validate on 25000 samples
Epoch 1/10
25000/25000 [==============================] - 12s 489us/sample - loss: 0.6917 - acc: 0.5162 - val_loss: 0.6860 - val_acc: 0.5558
Epoch 2/10
25000/25000 [==============================] - 12s 485us/sample - loss: 0.6434 - acc: 0.6450 - val_loss: 0.5741 - val_acc: 0.7036
Epoch 3/10
25000/25000 [==============================] - 12s 482us/sample - loss: 0.4729 - acc: 0.7753 - val_loss: 0.4278 - val_acc: 0.8019
Epoch 4/10
25000/25000 [==============================] - 12s 487us/sample - loss: 0.3406 - acc: 0.8524 - val_loss: 0.3833 - val_acc: 0.8292
Epoch 5/10
25000/25000 [==============================] - 12s 483us/sample - loss: 0.2646 - acc: 0.8873 - val_loss: 0.3691 - val_acc: 0.8388
Epoch 6/10
25000/25000 [==============================] - 12s 482us/sample - loss: 0.2038 - acc: 0.9180 - val_loss: 0.3913 - val_acc: 0.8380
Epoch 7/10
25000/25000 [==============================] - 12s 484us/sample - loss: 0.1641 - acc: 0.9350 - val_loss: 0.4083 - val_acc: 0.8380
Epoch 8/10
25000/25000 [==============================] - 12s 483us/sample - loss: 0.1318 - acc: 0.9490 - val_loss: 0.4307 - val_acc: 0.8374
Epoch 9/10
25000/25000 [==============================] - 12s 484us/sample - loss: 0.1029 - acc: 0.9595 - val_loss: 0.4761 - val_acc: 0.8348
Epoch 10/10
25000/25000 [==============================] - 12s 485us/sample - loss: 0.0871 - acc: 0.9660 - val_loss: 0.4966 - val_acc: 0.8368
```
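This log shows a typical overfitting pattern. Training loss keeps falling, while validation loss bottoms out at epoch 5 (0.3691) and rises afterwards. Keras can stop training automatically if you pass `callbacks=[tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=2, restore_best_weights=True)]` to `fit`. This works best with a held-out split such as `validation_split=0.2` rather than the test set. The pure-Python sketch below replays that patience rule on the validation losses from this log. `epochs_until_stop` is a hypothetical helper, not a Keras API.

```python
def epochs_until_stop(val_losses, patience):
    """Return (best_epoch, stop_epoch), both 1-based, using an EarlyStopping-style patience rule."""
    best_loss, best_epoch, wait = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0  # improvement: reset the counter
        else:
            wait += 1
            if wait >= patience:                          # too many epochs without improvement
                return best_epoch, epoch
    return best_epoch, len(val_losses)

# val_loss values copied from the training log above
val_losses = [0.6860, 0.5741, 0.4278, 0.3833, 0.3691, 0.3913, 0.4083, 0.4307, 0.4761, 0.4966]
print(epochs_until_stop(val_losses, patience=2))  # (5, 7)
```

With `patience=2`, training would have stopped after epoch 7. With `restore_best_weights=True`, the model would keep the epoch-5 weights.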

```python
model.save('trained_model.h5')
```

Model Pipeline

Load trained Model

```python
trained_model = tf.keras.models.load_model('trained_model.h5')
trained_model.summary()  # prints the same architecture as model.summary() above
```


Sentence to Vectors

```python
import numpy as np

def sentence_2_int(sentence):
    # Convert each word to its integer ID (same lookup as before)
    sentence_2_int_list = []
    for i in sentence.lower().split(' '):
        sentence_2_int_list.append(word_2_int[i])
    # Shape (1, n): a batch containing a single sequence
    arr = np.array(sentence_2_int_list).reshape(1, -1)
    # Pad (or truncate) to the length the model was trained on
    arr = tf.keras.preprocessing.sequence.pad_sequences(arr, value=0, padding='post', maxlen=sentence_len)
    return arr

sentence = "Worst movie i've ever seen"
sentence_2_int(sentence)
```

```
array([[246,  17, 204, 123, 107,   0,   0,   0,   0,   0,   0,   0,   0,
          0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,
          0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,
          0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,
          0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,
          0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,
          0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,   0,
          0,   0,   0,   0,   0,   0,   0,   0,   0]], dtype=int32)
```

```python
# The sigmoid output is the probability that the review is positive
trained_model.predict(sentence_2_int(sentence))
```

array([[0.22403198]], dtype=float32)

```python
def sentence_2_prediction(sentence):
    vector = sentence_2_int(sentence)
    # predict returns a (1, 1) array; take the single probability out of it
    prob = trained_model.predict(vector)[0, 0]
    if prob > 0.5:
        print('Positive Review :D')
    else:
        print('Negative Review :(')

sentence = 'Good Movie i really enjoyed it'
sentence_2_prediction(sentence)
```

Positive Review :D

```python
sentence = 'worst movie'
sentence_2_prediction(sentence)
```

Negative Review :(
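`predict` returns probabilities from the final sigmoid, and the 0.5 cut-off turns them into labels. When scoring several reviews at once, the same rule can be vectorised. Below is a NumPy sketch with a hypothetical helper `probs_to_labels`. The first probability is the 0.22403198 printed above; the second is made up for illustration.

```python
import numpy as np

def probs_to_labels(probs, threshold=0.5):
    """Map sigmoid outputs of shape (batch, 1) to 'Positive'/'Negative' strings."""
    positive = np.asarray(probs).reshape(-1) > threshold  # same rule as prob > 0.5
    return np.where(positive, "Positive", "Negative")

probs = np.array([[0.22403198], [0.91]], dtype=np.float32)  # second value is hypothetical
print(probs_to_labels(probs))
```

With the real model, `probs` would come from `trained_model.predict` on a batch of padded reviews. Raising the threshold makes the classifier more conservative about calling a review positive.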

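If you try many different sentences, you may notice that the model does not actually perform well. The training log hints at why: training accuracy climbs to 0.966, while validation accuracy levels off around 0.84 and validation loss starts rising after epoch 5, a typical sign of overfitting. Possible reasons include:

- vocabulary size
- sequence length (the number of words each review is padded or truncated to)
- model architecture
- embedding dimension
- how new sentences are encoded (the prediction helpers use raw `get_word_index` values, without the shift of 3 that `load_data` applies)

Try changing each of these and retraining the model to improve its performance.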
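For the sequence length, one common heuristic is to look at the distribution of review lengths. You then pick a `maxlen` that covers most reviews without padding everything to the longest one. The sketch below assumes you compute `lengths = [len(x) for x in train_data]` on the raw data from `load_data`, before padding overwrites it. The lengths shown are made-up numbers, the 90th percentile is an arbitrary choice, and `choose_maxlen` is a hypothetical helper.

```python
import numpy as np

def choose_maxlen(lengths, percentile=90):
    """Round the given percentile of review lengths up to a whole number of words."""
    return int(np.ceil(np.percentile(lengths, percentile)))

# Hypothetical review lengths (in words)
lengths = np.array([120, 180, 200, 230, 260, 300, 340, 410, 520, 700, 1500])
maxlen = choose_maxlen(lengths)
coverage = np.mean(lengths <= maxlen)  # fraction of reviews that fit without truncation
print(maxlen, coverage)
```

A larger `maxlen` keeps more of each review but makes the flattened input, and therefore the first dense layer, proportionally larger.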